Innovation Micro Studies

AR PERSPECTIVE

AR content into a Real-Time broadcast signal path

AR DEMO

Product Goal: To share live AR demo experiences through multiple broadcast cameras simultaneously from a live event in real time.

What We Made: An app process that integrates AR content from an iOS device into a 4K 60fps HDV broadcast pipeline.

Results: We created a proof of concept and a development roadmap to push past current live-event limitations... The prototype would have allowed guests, whether watching online or attending the event, to view the AR demo experience from any of the broadcast camera perspectives.

Touchstone Goals

We were charged with three primary goals and given an awareness of the big picture.

World Alignment: Alignment of multiple coordinate systems.

App State: Ability to pass AR-related data to and from the demo device.

Render & Composite: Render from the point of view of each camera, including lens intrinsics.
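
The alignment and render goals above can be illustrated with a minimal sketch. It assumes a simple pinhole camera model, and every name and number in it (`rigid_transform`, `apply_transform`, `project`, the camera offset, the intrinsics) is hypothetical; the source does not describe how the prototype was implemented. A point anchored in the AR device's space is moved into one broadcast camera's space, then projected with that camera's intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels).

```python
import math


def rigid_transform(yaw_deg, translation):
    """Return a 4x4 row-major matrix: rotate about the Y axis, then translate."""
    angle = math.radians(yaw_deg)
    c, s = math.cos(angle), math.sin(angle)
    tx, ty, tz = translation
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Apply a 4x4 transform to a 3D point (implicit w = 1)."""
    x, y, z = point
    return tuple(
        matrix[row][0] * x + matrix[row][1] * y + matrix[row][2] * z + matrix[row][3]
        for row in range(3)
    )


def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection to pixel coordinates; the camera looks down +Z."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, nothing to draw
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical values: an AR anchor in device space, seen by a camera 2 m back.
device_to_camera = rigid_transform(0.0, (0.0, 0.0, 2.0))
anchor_in_device_space = (1.0, 0.0, 0.0)
anchor_in_camera_space = apply_transform(device_to_camera, anchor_in_device_space)
pixel = project(anchor_in_camera_space, fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
```

In a multi-camera setup, each broadcast camera would carry its own transform and intrinsics, and the same anchor would be projected once per camera. Lens distortion is left out of this sketch.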

FRIENDVERSARY

Personalized video platform

UNIQUE GENERATIVE VIDEO

Product Goal: Follow up the super-popular “Friends Day” video with a new, dynamically generated celebration of Facebook friendships that elevates users’ own content from mere assets into delightful films.

What We Made: A live-action design film (with CG and 2D animation elements) that used Chapeau’s dynamic compositing process to create “live” video files, which were turned into millions of personalized videos.

Results: When it debuted in 2016, more than 1 billion “Friendversary” films were made, in 35 languages. Today, more than 200,000 personalized versions of this now-iconic video are still being rendered daily.

ADDITIONAL GENERATIVE VIDEO

INTEL: ALCHEMIST

Photoreal Realtime GPU Simulation

Ray Tracing with Thread Sorting Demo

Product Goal: Create a tech demo showing the performance benefit of the Intel Alchemist-G10 dGPU over the Nvidia RTX 3060 Ti when fully leveraging ray tracing hardware (RTU) and thread sorting capabilities (TSU).

What We Made: Multiple simulations with 100+ objects and 128 different shaders rendered photoreal in real time. Our demo scaled object count, complexity, and shader count using sliders. The simulation scaled up in stages: from 128 x 128 object variables, to 100,000 object variables, to over a million objects rendered and refracting off one another in real time.

Results: Alchemist maintained a higher level of performance than the RTX as additional shaders were added. The final simulation grows from a single object to a sea of hundreds of thousands of particles, with the 128 shaders applied randomly and rendered in real time.

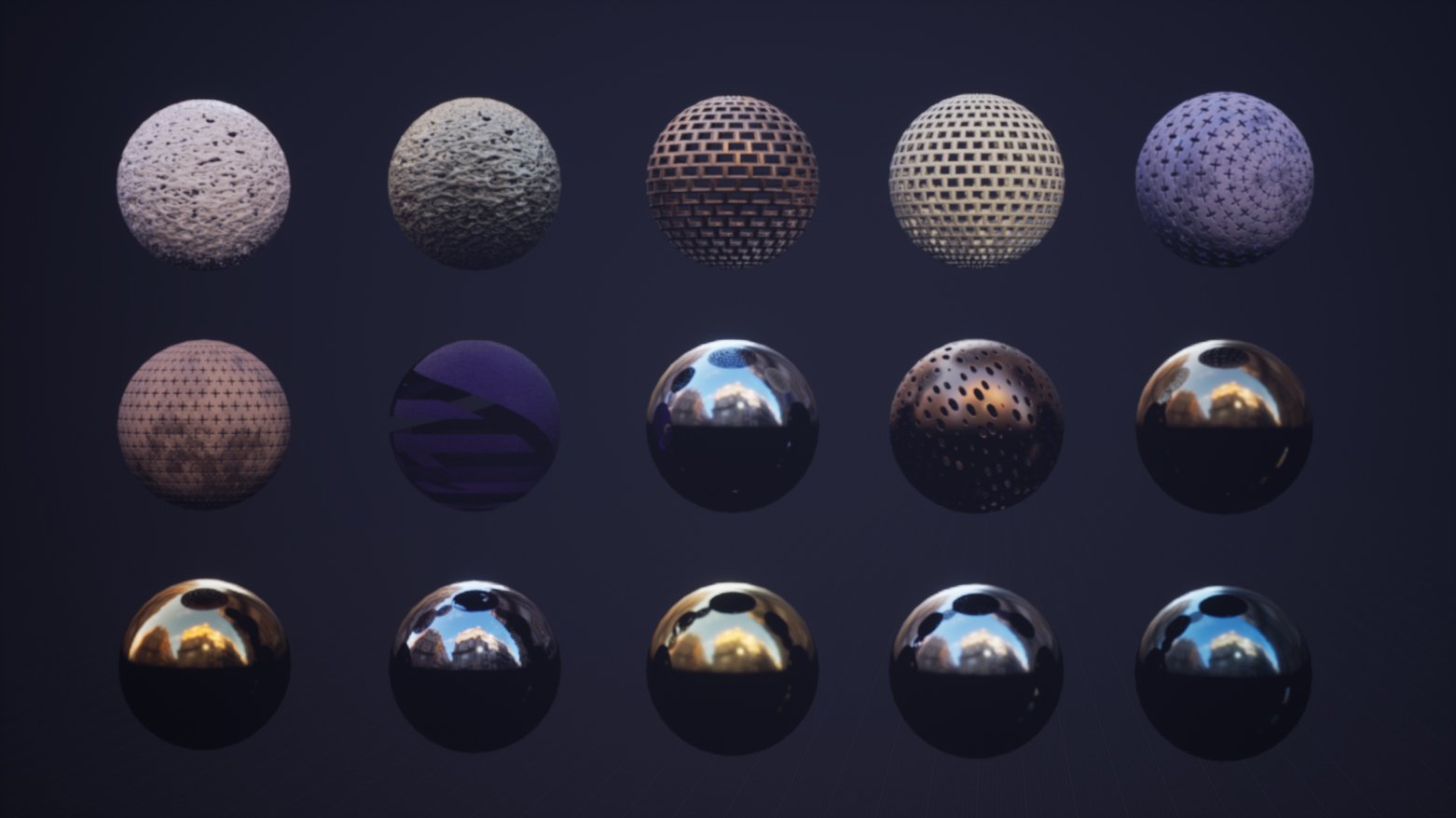

Shader Materials

REQUIREMENTS FOR SHADERS

Create 128 unique shader materials with variation in structure, using procedural alpha cut-outs, refractions, and multilayer depth materials.

Building The Scenes

OBJECT RENDER

Scenes feature an animated, swirling cloud of large particles, with 128 different shaders applied to an emitter producing hundreds of thousands of particles.
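
As a rough illustration of applying 128 shaders across a large particle set, the sketch below picks one shader index per particle uniformly at random. This is an assumption about what "applied randomly" could mean; the demo's real emitter, engine, and assignment scheme are not described in the source, and `assign_shaders`, the seed, and the particle count are hypothetical.

```python
import random
from collections import Counter

SHADER_COUNT = 128  # from the text; everything else here is illustrative


def assign_shaders(particle_count, seed=0):
    """Pick one shader index per particle, uniformly at random."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    return [rng.randrange(SHADER_COUNT) for _ in range(particle_count)]


shader_ids = assign_shaders(200_000)
usage = Counter(shader_ids)  # how many particles use each shader
```

Seeding the generator keeps a given run repeatable, which is useful when the same scene has to be compared across two GPUs.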

Final Renders

Selection pulled from endless sim

HUMAN INTERACTION & INDUSTRIAL DESIGN

UI testing and hardware integration

Iteration & Prototyping

Product Goals: To work between software and hardware teams to demonstrate UI and device functionality for designers, developers and engineers. Iterative prototyping helps identify areas where the designers may need to consider alternative firmware or hardware components.

What We Made: 1000+ optimized scene files for iOS X stress testing, plus a process that lets UI animators push creative boundaries without thermally throttling the device GPU.

Results: Smooth iOS finger-gesture functionality across dozens of features, resulting in more on-device functions.

GEOTRON

Automated Screen Capture for global language versions

OS Application

Product goal: Shorten the time customers in non-English-speaking countries wait to receive assets in their local language.

What we made: An Apple-specific application that enables Apple’s internal-build devices to auto-generate pixel-perfect screen captures in multiple languages with no humans in the loop.

Results: Apple’s internal engineers have estimated that Chapeau’s app delivers a greater than 87% improvement over Apple’s current workflow for creating GEO-accurate device captures. We turned a 24-day wait into a 3-day wait.
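
The two figures above line up if the improvement is read as a reduction in wait time. That reading is an assumption, since the source does not say how the estimate was calculated; `percent_reduction` is a hypothetical helper used only to show the arithmetic.

```python
def percent_reduction(before_days, after_days):
    """Percentage by which a wait time shrinks."""
    return (before_days - after_days) / before_days * 100


reduction = percent_reduction(24, 3)  # 87.5
```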

OPTIMIZED MANUAL PROCESS VS. GEOTRON

NFL: BRONSON

Voice-Animated Virtual Assistant (NFL)

VOICE-RESPONSIVE VIRTUAL ASSISTANT (MOBILE APP INTEGRATION)

Product goal: The NFL wanted to explore voice-driven device interaction for an internal presentation. They needed proof-of-concept UI films and avatar options, along with robust R&D on tech and creative pipelines.

What we made: As a creative and production partner, Chapeau ideated on multiple looks and animations for the voice-driven avatar (from abstract to human form). The UI films incorporated those explorations into voice-driven scenarios in which the avatar listened, responded, and transported users to a page or experience within the app which answered their query. Utilizing information we gathered from our tech explorations, we delivered a prototype technology pipeline and UX diagrams in addition to design and animation explorations, several avatar variations, and the demo UI films.

Results: The films and avatar explorations successfully showed NFL leadership the promise of voice-driven UI within their products.

NFL MATTHEW ALEXA SKILL CHARACTER DESIGN

Chapeau designed a voice-to-visual workflow while developing the look and animation of “Matthew”, a voice-activated companion for the NFL Lab’s “Rookies Guide” Alexa skill. Visit the NFL’s skill and ask for a definition of “the Statue of Liberty” to see Matthew in action.

RETAIL WALL + IN-STORE SCREENS

Content for Retail Wall and Out Of Home experiences.

WALL EXPERTISE

Product Goal: We were asked to architect and build a high-resolution content system to run on enormous proof-of-concept LED tiles for global retail stores.

What We Made: Using tiles from 3 different LED tile manufacturers, we built a proof-of-concept video wall for Apple’s Employee Store at 1 Infinite Loop. We created 8 minutes of 6K animated CG content per quarter to play from a remote RMM cloud server. Once 125 stores had installed walls, we quickly needed to build a transcoding server to auto-transcode 1.7 million 6K frames per quarter into 21 resolutions and 27 languages.

Results: After executive approval, our single wall bloomed into 125 video walls that were integrated into retail stores around the world. Pixel-perfect, color-accurate 6K 60fps content that can be remotely programmed in real time from a cloud-based RMM.
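
To give a sense of the fan-out behind the transcoding step, here is a minimal sketch that enumerates one job per resolution and language pair. It assumes every resolution is paired with every language, which the source does not confirm; the identifiers (`res_00`, `lang_00`, `wall_content_master`) and the `build_jobs` helper are placeholders, not the real server's configuration.

```python
from itertools import product

RESOLUTION_COUNT = 21  # from the text
LANGUAGE_COUNT = 27    # from the text

# Placeholder identifiers; the real resolution and language lists are not given.
resolutions = [f"res_{i:02d}" for i in range(RESOLUTION_COUNT)]
languages = [f"lang_{i:02d}" for i in range(LANGUAGE_COUNT)]


def build_jobs(source_name):
    """List one transcode job per (resolution, language) pair."""
    return [
        {"source": source_name, "resolution": res, "language": lang}
        for res, lang in product(resolutions, languages)
    ]


jobs = build_jobs("wall_content_master")
variant_count = len(jobs)  # 21 * 27 = 567
```

Under that assumption, each piece of source content yields 567 output variants, which is why an automated server rather than a manual export process would be needed.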

COLOSSUS

5 year timelapse

Product goal: Apple Park (nicknamed “The Spaceship”) was built to be the most sophisticated technology campus in the world. As a long-term creative collaborator, we were asked to visually capture the enormous scope of the construction process.

What We Made: The project was originally envisioned as a timelapse based on drone photography, but Chapeau pivoted to CG when drones proved too unreliable for the scale of this project. Tens of thousands of CG objects populate the Colossus shot, brought to life in detailed, layered animations that capture the process of building Apple’s mile-wide new headquarters at photoreal quality, from its messy beginnings to its pristine finish.

Results: Apple was thrilled with the level of detail in the execution of the timelapse. Chapeau received accolades for the work, and we are proud to have been involved in this monumental experience.

ADDITIONAL CONTENT

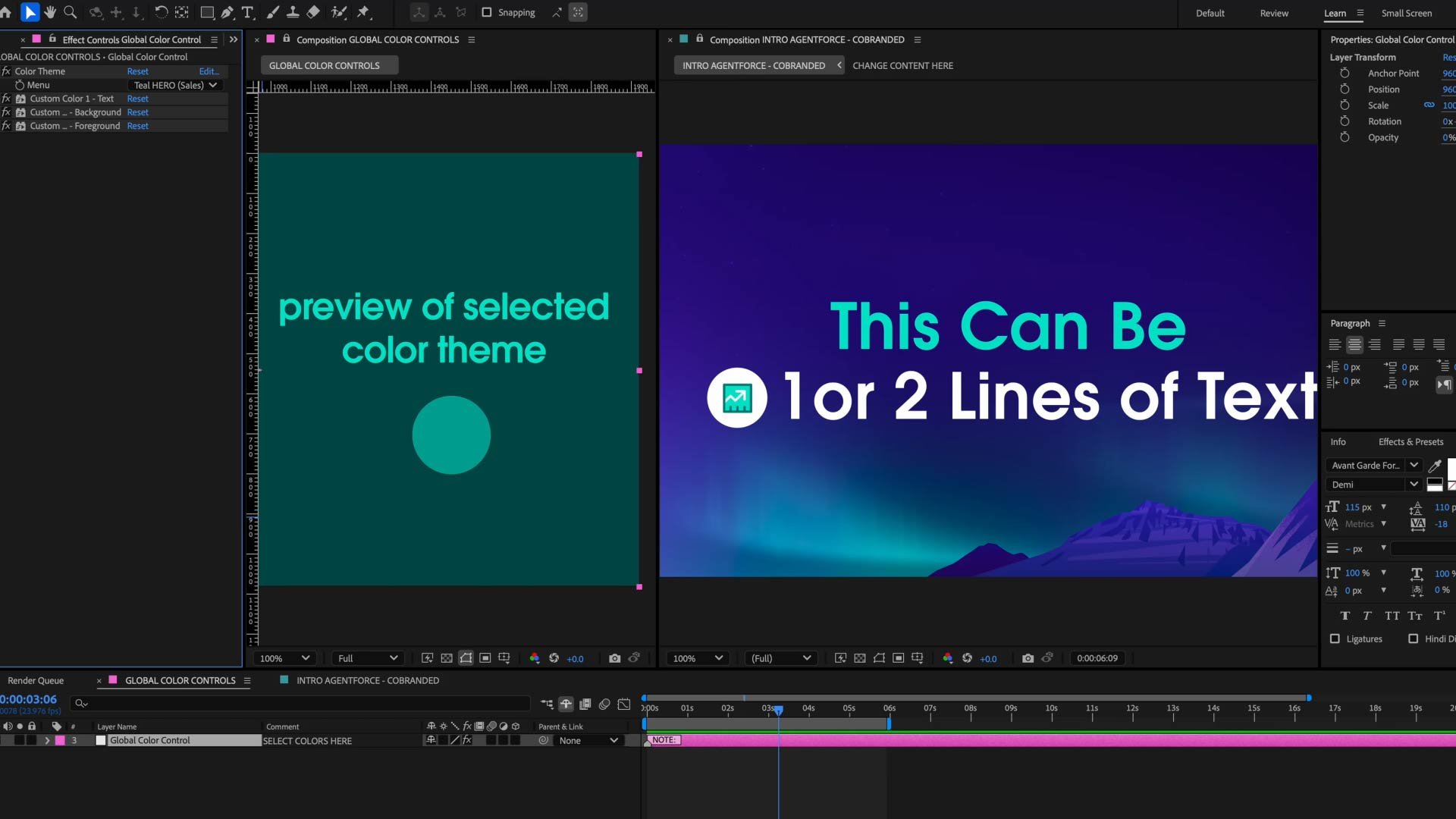

SALESFORCE AUTOMATION TOOLKIT

Automated templatized brand assets

AFX AUTOMATION TOOLKIT

Product goal: Develop an agile, plug-and-play motion-graphics storytelling asset toolkit to deploy across all studios and Salesforce.

What we made: A modular and templatized automated multi-brand demo-video toolkit.

Results: Our metric for success: one control changes hundreds of animations.
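
The "one control, many animations" idea can be sketched in a few lines. This is plain Python for illustration only, not the toolkit's actual implementation, which the source does not describe; `GlobalControl`, `AnimationLayer`, the layer count, and the color values are all hypothetical.

```python
class GlobalControl:
    """A single shared value that many animation layers read from."""

    def __init__(self, value):
        self.value = value


class AnimationLayer:
    """A layer that looks up the shared control instead of storing a copy."""

    def __init__(self, name, control):
        self.name = name
        self.control = control

    @property
    def color(self):
        return self.control.value


brand_color = GlobalControl("#0070D2")
layers = [AnimationLayer(f"layer_{i}", brand_color) for i in range(300)]

brand_color.value = "#FF6600"  # one edit to the control
updated = all(layer.color == "#FF6600" for layer in layers)
```

Because each layer reads the control at lookup time rather than copying it, a single change propagates everywhere without touching the individual layers.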

GLOBAL COLOR PICKER

DYNAMIC CHAT BUBBLE RIG

TYPE / LOGO ANIMATION RIG

CHARACTER & TOY DESIGN

EVA Airways: Character & Toy Design

EVA Airways: Sticker Pack & AR Experience

EVA Air Tasteway To Asia: Experiential Installation

Thank You

Our creative work lives at the intersection of integrated media and innovative technology, and is fueled by our constant drive to do something that's never been done before.

We look forward to collaborating with you. Reach out anytime.