CHAPEAU X SUMERIAN HOST

Personalized Videos

INTRODUCTION

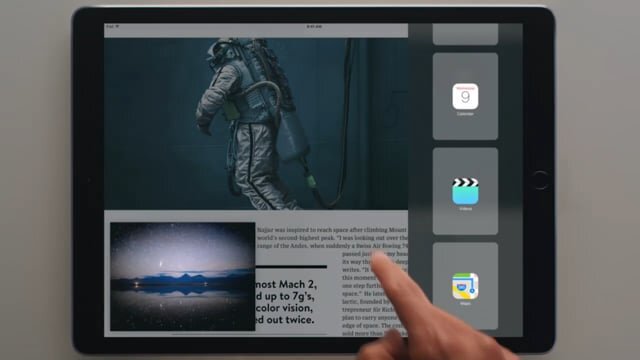

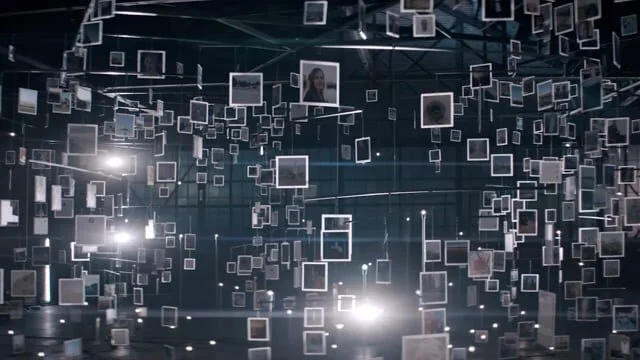

Since Facebook first announced Friends Day in 2015, they have generated millions of unique videos for connected Facebook friends around the world. Dynamic content brings personalized social media to an unprecedented level of visual engagement. At their core, Facebook’s personalized videos marry traditional film production with the digital medium of personal, user-generated content. Chapeau was at the forefront of helping Facebook create this process.

Facebook had a vision to use the video medium to highlight connections between users. They would create high-quality short films, populated by personalized, user-generated content, to elevate users’ experience on the platform. The ask had a potential scale in the billions. What does it take to create unique videos for millions (or billions) of users? The scope only became clear over time, as Chapeau’s technical artists worked with Facebook engineers to create what became a new form of video rendering, which Chapeau and Facebook call Dynamic Compositing.

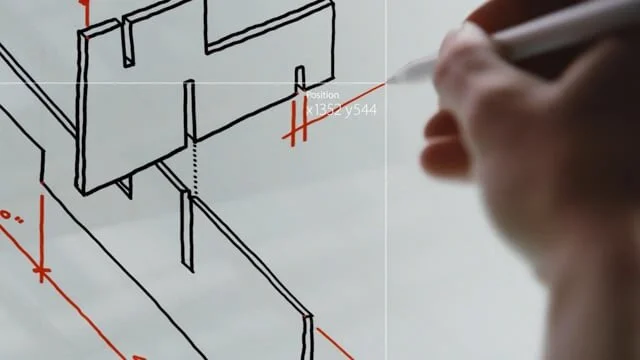

The videos began as an experience focusing mainly on the most visible content type on the platform: user images. The creative ask at the time was to populate user-generated photos into a short film format. Facebook wanted to use high-resolution, high-design, practically shot live footage to achieve an organic, material quality in their films that is difficult, time-consuming, and expensive to convey using purely CGI video techniques. Guided by our mission to help Facebook execute their vision, Chapeau’s research and development process identified a need: we had to design a way to create a simplified data output from a streamlined base video project file. Deriving this new data parameter and slim-layer templates from complex Dynamic video files became a core challenge for our technical artists to solve.

Over the course of several video concepts, additional dynamic content types became entwined with story, which continually presented unique challenges to an already complicated workflow. Chapeau was able to bridge creative and technical disciplines, iterating with Facebook engineers to identify and build a high-end, 3-layer compositing process designed to render quickly while maintaining Facebook’s high production standards. Fewer layers means smaller files and faster turnaround for more individual users. Essentially, smaller file sizes enable more sharing; sharing builds community, which is what Facebook is all about.
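To make the idea of a 3-layer composite concrete, here is a minimal per-pixel sketch. It is not the Dynamic Compositing pipeline itself: the roles of the three layers (a background plate, a user photo, and a foreground overlay) are an assumption for illustration, and the blend used is the standard Porter-Duff "over" operator on straight-alpha RGBA values.

```python
from typing import Tuple

# An RGBA pixel with straight (non-premultiplied) channels in the range 0.0-1.0.
Pixel = Tuple[float, float, float, float]


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff "over": place `top` on `bottom`, both straight-alpha."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        # Both layers fully transparent: nothing to show.
        return (0.0, 0.0, 0.0, 0.0)

    def blend(t: float, b: float) -> float:
        # Weight each colour by its alpha contribution, then un-premultiply.
        return (t * ta + b * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def composite_three(background: Pixel, user_photo: Pixel, foreground: Pixel) -> Pixel:
    """Stack the three assumed layers bottom-to-top for a single pixel."""
    return over(foreground, over(user_photo, background))


if __name__ == "__main__":
    blue_plate = (0.0, 0.0, 1.0, 1.0)     # hypothetical background plate
    red_photo = (1.0, 0.0, 0.0, 1.0)      # hypothetical opaque user photo
    white_overlay = (1.0, 1.0, 1.0, 0.5)  # hypothetical half-transparent overlay
    print(composite_three(blue_plate, red_photo, white_overlay))
```

With only three layers, each output pixel needs just two blend operations, which is one way to see why a slimmer layer stack renders faster than a deep one.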

Alexa Avatar

INTRODUCTION

The NFL originally approached us while creating their first Alexa Skill, “Rookie’s Guide to the NFL”, with the desire to create an Alexa-linked 3D avatar with a more customized, branded look than the current AWS offering, Sumerian Host, provides. They wanted to keep their Polly→Sumerian Host workflow intact while raising the production value and giving the avatar a unique look. With Chapeau’s background in traditional video production techniques, along with our tech-forward outlook on content display and development, we researched and designed a new workflow to help the NFL devise their own face for Alexa, or in this case, “Matthew”.

Together, Chapeau + NFL designed a workflow that would take full advantage of AWS's text-to-speech system. Scripts were entered into Polly, which outputs an .MP3 and viseme instructions in .JSON format. This is where we diverged from Sumerian Host and created our own workflow. In order to create an elevated product with an intuitive workflow, we focused our research and design efforts on pre-production. There are several layers of visual effects work that need to be built before an avatar begins its look development process. Specific character rigging and a toolkit of ambient body animations are key to making this whole process run efficiently and smoothly.
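For readers unfamiliar with the Polly export mentioned above, the sketch below reads viseme instructions into simple records. It assumes the export is in Polly's speech-mark format, a stream of line-delimited JSON objects with `time` (milliseconds from the start of the audio), `type`, and `value` fields. The `VisemeMark` class, the `parse_viseme_marks` function, and the sample data are hypothetical and hand-written for illustration; they are not taken from the NFL project.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VisemeMark:
    time_ms: int  # offset from the start of the audio, in milliseconds
    viseme: str   # Polly viseme code, e.g. "p" or "sil"


def parse_viseme_marks(text: str) -> List[VisemeMark]:
    """Keep only the viseme entries from line-delimited speech-mark JSON."""
    marks = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines in the export
        record = json.loads(line)
        if record.get("type") == "viseme":
            marks.append(VisemeMark(int(record["time"]), str(record["value"])))
    # Sort defensively so downstream animation code can rely on time order.
    return sorted(marks, key=lambda mark: mark.time_ms)


# Hand-written sample in the assumed format, not real Polly output.
SAMPLE = """\
{"time":0,"type":"word","start":0,"end":2,"value":"Pa"}
{"time":0,"type":"viseme","value":"p"}
{"time":125,"type":"viseme","value":"a"}
{"time":400,"type":"viseme","value":"sil"}
"""

if __name__ == "__main__":
    for mark in parse_viseme_marks(SAMPLE):
        print(mark.time_ms, mark.viseme)
```

Filtering on `type` lets the same reader handle exports that mix word or sentence marks with visemes.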

From here, we can plug the Polly exports into our system and generate lip-sync-accurate animations on masterfully rendered avatars. Animating lips by hand is a real killer: it can take weeks to nail a 10-second clip. Our system will do it in seconds. Since the devices that support video and image files for Alexa currently require pre-rendered files (much like a traditional video medium), we optimized this workflow and the technology that runs it for speed of delivery. We can build and render the hundreds of files needed for a Skill on a production timeline shortened by years. Yes, it sounds impossible, but we figured it out. 800 pre-rendered animated films could take 4 years through a traditional animation workflow (roughly the schedule of a Pixar feature). Through our system, we estimate that the timetable for developing custom avatars for a given Skill could be compressed to 4-6 months.
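One plausible way to turn timed visemes into lip-sync animation is to snap each viseme to the nearest video frame and key a mouth pose there. The sketch below shows that idea only; it is not Chapeau's system. The pose names, the `POSE_FOR_VISEME` table, the 30 fps default, and the `visemes_to_keyframes` function are all assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical mapping from viseme codes to rig pose names.
POSE_FOR_VISEME: Dict[str, str] = {
    "sil": "mouth_rest",
    "p": "mouth_closed",
    "a": "mouth_open",
}


def visemes_to_keyframes(
    marks: Iterable[Tuple[int, str]],
    fps: int = 30,
    default_pose: str = "mouth_rest",
) -> List[Tuple[int, str]]:
    """Convert (time_ms, viseme) pairs into (frame_number, pose_name) keyframes."""
    keyframes: List[Tuple[int, str]] = []
    for time_ms, viseme in sorted(marks):
        # Integer round-half-up from milliseconds to a frame index.
        frame = (time_ms * fps + 500) // 1000
        pose = POSE_FOR_VISEME.get(viseme, default_pose)
        if keyframes and keyframes[-1][0] == frame:
            # Two visemes landed on the same frame: the later one wins.
            keyframes[-1] = (frame, pose)
        else:
            keyframes.append((frame, pose))
    return keyframes


if __name__ == "__main__":
    sample = [(0, "sil"), (125, "p"), (250, "a"), (400, "sil")]
    for frame, pose in visemes_to_keyframes(sample):
        print(frame, pose)
```

Because the conversion is a single pass over the viseme list, a clip's mouth keyframes can be produced in far less time than hand animation, which is consistent with the seconds-versus-weeks contrast described above.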